The Ethics of AI in Software Development: Navigating the Grey Areas

Table of Contents

- The Grey Areas: Why Ethics Matter in AI Development

- Example: Facial Recognition Technology

- The Challenge of Bias in AI

- Example: AI Hiring Algorithms

- Transparency: The Black Box Problem

- Example: Deep Learning Models

- The Privacy Paradox

- Navigating the Grey Areas

- Global Impacts: The Ethics of AI on a Global Scale

- Future Outlook According to Tech Giants

- My Take

Artificial Intelligence (AI) is like that superpower we all dreamt of as kids—it's smart, it's fast, and it can do things we never thought possible. But, like all superpowers, it comes with a set of responsibilities and, more importantly, ethical dilemmas. In the world of software development, where lines of code are turning into lines of moral questions, navigating the ethics of AI is becoming increasingly crucial. Welcome to the grey areas of AI in software development—where the lines between right and wrong are not just blurred but sometimes completely invisible.

The Grey Areas: Why Ethics Matter in AI Development

Ethics in AI isn't just about making sure your AI doesn't turn into Skynet and start a robot apocalypse (though, let’s be real, that’s a valid concern too). It's about ensuring that the technologies we create are used responsibly, fairly, and for the greater good. But what does that mean in practical terms for developers?

For starters, AI systems are only as good—or as bad—as the data they're trained on. Bias in data can lead to biased AI, which can perpetuate discrimination and inequality. Then there's the issue of transparency: how do we make sure that the decisions made by AI are understandable and accountable? And let's not forget privacy—AI's hunger for data can easily cross into invasive territory.

These are just a few of the ethical challenges developers face when working with AI. The tricky part? There's no one-size-fits-all answer. What might be ethical in one context could be questionable in another. It's a constant balancing act, where developers must weigh the potential benefits of AI against its possible harms.

Example: Facial Recognition Technology

Facial recognition technology has faced significant ethical scrutiny due to its potential for misuse in surveillance. For instance, the use of facial recognition by law enforcement agencies has raised concerns about privacy and racial bias.

In 2020, IBM announced that it would stop offering general-purpose facial recognition technology, citing ethical concerns about its use in mass surveillance and racial profiling.

Source: IBM Halts Facial Recognition

The Challenge of Bias in AI

One of the most talked-about ethical issues in AI is bias. AI systems learn from data, and if that data is biased, the AI will be too. This isn’t just a theoretical problem; it’s one we’ve seen play out in the real world.

Take, for example, the case of facial recognition technology. Several studies have shown that these systems are less accurate when identifying people of color, and least accurate for women of color. The reason? The training data used to develop these systems often lacks diversity, leading to biased outcomes.

This isn't just a technical issue—it's an ethical one. If AI is used in applications like law enforcement or hiring, biased algorithms can have serious consequences, perpetuating systemic inequalities. Developers must be vigilant in ensuring that the data they use is representative and that their AI systems are designed to minimize bias.
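One practical way to stay vigilant is to stop reporting a single overall accuracy number and break it down by group instead. Here is a minimal sketch of that idea in Python. The record format, the group names, and the sample data are all hypothetical, made up purely for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        if y_true == y_pred:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Largest difference in accuracy between any two groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation records: (group, true label, predicted label)
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

print(accuracy_by_group(sample))
print(accuracy_gap(sample))
```

A large gap does not tell you why the model underperforms for one group, but it does tell you where to start looking, for example at how well that group is represented in the training data.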

Example: AI Hiring Algorithms

AI hiring tools have been criticized for perpetuating bias. For example, Amazon’s experimental AI recruiting tool, which the company later scrapped, was found to favor male candidates over female ones because it was trained on resumes submitted mostly by men.
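A simple check for this kind of skew is to compare how often each group gets selected. The sketch below computes selection rates and the ratio between the lowest and highest rate. It assumes a hypothetical list of screening outcomes; the 0.8 threshold follows the "four-fifths" rule of thumb used in US employment practice, and it is a prompt for investigation, not proof of bias.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns {group: rate}."""
    counts = {}
    for group, selected in decisions:
        chosen, total = counts.get(group, (0, 0))
        counts[group] = (chosen + int(selected), total + 1)
    return {group: chosen / total for group, (chosen, total) in counts.items()}


def impact_ratio(decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Hypothetical screening outcomes, not real hiring data
outcomes = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

ratio = impact_ratio(outcomes)
print(f"impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Selection rates differ enough to warrant a closer look")
```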

"The danger of AI is not that it will become malevolent, but that it will become too competent. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble." - Nick Bostrom

Transparency: The Black Box Problem

Another major ethical concern with AI is transparency—or the lack thereof. AI systems, particularly those based on machine learning, are often described as "black boxes." This means that while they can make incredibly accurate predictions or decisions, understanding how they arrived at those decisions can be nearly impossible.

This lack of transparency raises serious ethical questions, especially in high-stakes situations like healthcare, lending, or criminal justice. If an AI system denies someone a loan or recommends a prison sentence, how can we be sure that decision was fair? And if we can’t understand the decision-making process, how can we hold anyone accountable?

Developers have a responsibility to ensure that their AI systems are as transparent as possible. This might involve using simpler, more interpretable models, or developing tools that allow users to understand and question AI decisions.
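To make "simpler, more interpretable models" concrete, here is a rough sketch of a linear score that reports how much each input pushed the result up or down. The feature names, weights, and applicant values are hypothetical and not taken from any real lending system.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights for a loan-style score
WEIGHTS = {"income_k": 0.02, "missed_payments": -0.4, "years_employed": 0.1}
BIAS = -1.0

applicant = {"income_k": 50, "missed_payments": 2, "years_employed": 3}
score, ranked = explain_linear_score(WEIGHTS, BIAS, applicant)

print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Because the score is just a weighted sum, anyone affected by the decision can be shown which factors mattered most. A deep network offers no such direct readout, which is the trade-off this section is about.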

Example: Deep Learning Models

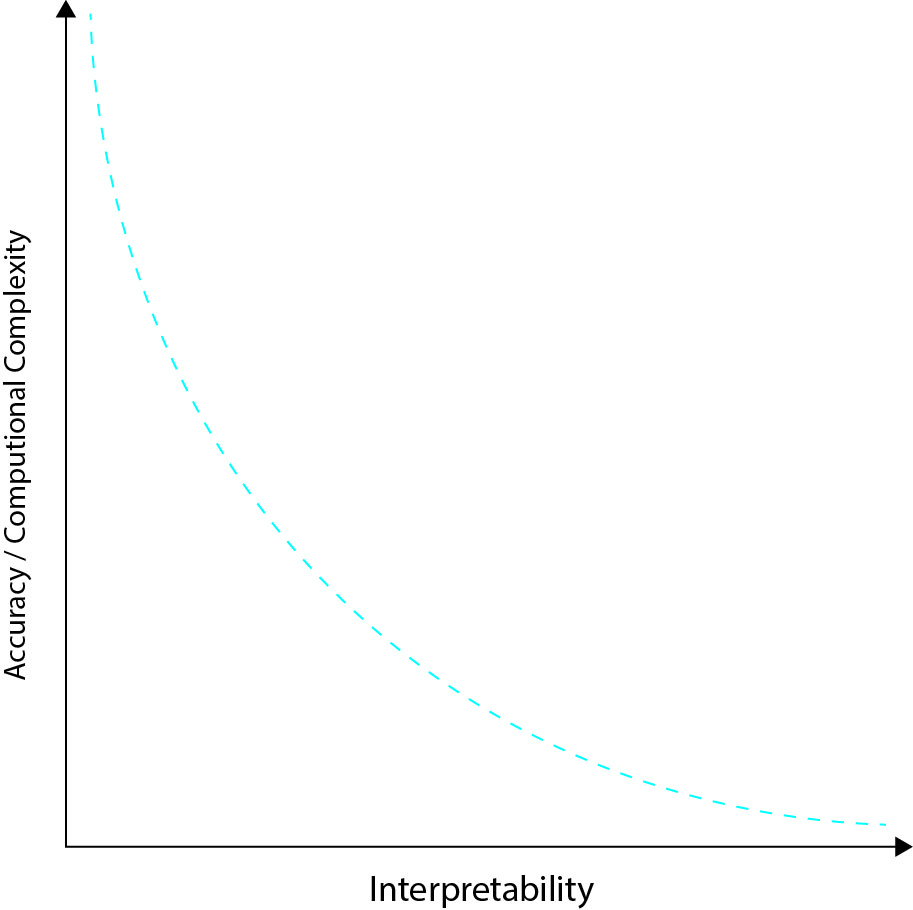

Deep learning models, such as those used in image recognition, are often described as "black boxes" because their decision-making processes are not easily interpretable.

The chart shows that accuracy, computational complexity, and interpretability trade off against one another. At one extreme, you achieve exceptional accuracy, but at the cost of extremely high computational demands and poor interpretability. A slightly less extreme option provides very good accuracy with very high computational requirements and moderate interpretability. A balanced approach offers good accuracy, manageable computational needs, and satisfactory interpretability. Lastly, a more interpretable model sacrifices some accuracy and computational efficiency but remains highly understandable.

The Privacy Paradox

AI’s insatiable appetite for data is another ethical minefield. On the one hand, more data means better AI models. On the other hand, the collection and use of that data can easily infringe on privacy.

Consider smart home devices that use AI to learn your habits and preferences. These devices can make your life more convenient, but they also collect a staggering amount of personal data. Where does that data go? Who has access to it? And how is it being used?

These are questions developers must consider when building AI systems. It's not enough to ensure that data is collected legally; developers must also think about how to protect that data and use it in a way that respects users' privacy.
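Two habits go a long way here: collect only the fields you actually need, and avoid storing raw identifiers. The sketch below shows one way to do both with Python's standard library. The field names, the allow-list, and the key are hypothetical; in practice the key would come from a secrets manager, not from source code.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the model is assumed to need
ALLOWED_FIELDS = {"device_type", "event", "hour_of_day"}

# Placeholder key for illustration only
KEY = b"replace-with-a-secret-from-your-key-store"


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 hash so raw IDs are not stored."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(event: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the user ID for a pseudonym."""
    cleaned = {key: value for key, value in event.items() if key in ALLOWED_FIELDS}
    cleaned["user"] = pseudonymize(event["user_id"], secret_key)
    return cleaned


raw_event = {
    "user_id": "alice@example.com",
    "home_address": "221B Baker Street",
    "device_type": "thermostat",
    "event": "temperature_changed",
    "hour_of_day": 22,
}

print(minimize(raw_event, KEY))
```

Keep in mind that pseudonymized data is still personal data: anyone holding the key can link records back to a person, so this reduces exposure without removing the need for access controls and retention limits.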

News: In 2021, Apple introduced App Tracking Transparency in iOS 14.5, which requires apps to ask permission before tracking users, and added further privacy features in iOS 15, addressing privacy concerns. - Source: Apple's Privacy Features

Navigating the Grey Areas

Given these challenges, how can developers navigate the ethical grey areas of AI? Here are a few principles to keep in mind:

- Do No Harm: This is the most basic ethical principle, but it's worth reiterating. When developing AI systems, always consider the potential harm they could cause. If there's a risk that your AI could be used to discriminate, invade privacy, or otherwise cause harm, you need to rethink your approach.

- Bias Detection and Mitigation: Actively seek out and address bias in your data and models. This might involve using more diverse training data, testing your models for biased outcomes, and making adjustments as needed.

- Transparency: Aim to make your AI systems as transparent as possible. This could mean using simpler models that are easier to interpret or developing tools that allow users to understand and question AI decisions.

- Privacy Protection: Be mindful of the data you're collecting and how it's being used. Implement strong data protection measures, and always give users control over their data.

Global Impacts: The Ethics of AI on a Global Scale

The ethical considerations of AI aren’t just local issues—they have global implications. AI is being used in ways that affect people all over the world, and the ethical challenges we face in one country might look very different elsewhere.

For example, in countries with strict government surveillance, AI-driven technologies can be used to track and control citizens in ways that would be considered unethical in more democratic societies. On the other hand, AI also has the potential to address global challenges like climate change and poverty—if used responsibly.

As AI continues to spread across the globe, developers must be mindful of these different contexts and the unique ethical challenges they present.

Future Outlook According to Tech Giants

Looking to the future, it’s clear that the ethical challenges of AI are only going to become more complex. As AI systems become more advanced and integrated into our daily lives, the potential for both harm and good will increase.

"AI is going to change the world, but we need to make sure it changes it for the better. That means being responsible, being ethical, and thinking about the long-term consequences of what we’re building." - Sundar Pichai

The tech giants are well aware of this, and many are taking steps to address the ethical challenges of AI. For example, Google published a set of AI Principles in 2018 to guide its AI projects, although an external AI ethics advisory council it announced in 2019 was dissolved shortly after launch. Microsoft has also been vocal about the need for ethical AI, advocating for transparency, fairness, and accountability in AI development.

My Take

Navigating the ethics of AI in software development is like walking a tightrope. On one side, there’s the incredible potential of AI to make our lives better, solve complex problems, and drive innovation. On the other side, there’s the very real risk of causing harm, whether through biased algorithms, loss of privacy, or lack of accountability.

As developers, it’s our responsibility to find that balance. We need to be constantly vigilant, asking ourselves not just if we can build something, but if we should. It’s not always an easy task, and there will undoubtedly be missteps along the way. But if we’re thoughtful, responsible, and committed to doing the right thing, we can harness the power of AI for good.

And who knows? Maybe one day, we’ll look back at this era of AI development and be proud of the ethical choices we made. Or, at the very least, we’ll be able to say we did our best to navigate the grey areas with integrity and care.